Kara Manke, UC Berkeley

John Schulman cofounded the ambitious software company OpenAI in December 2015, shortly before finishing his Ph.D. in electrical engineering and computer sciences at UC Berkeley. At OpenAI, he led the reinforcement learning team that developed ChatGPT, a chatbot based on the company’s generative pre-trained transformer (GPT) language models. ChatGPT has become a global sensation, thanks to its ability to generate remarkably human-like responses.

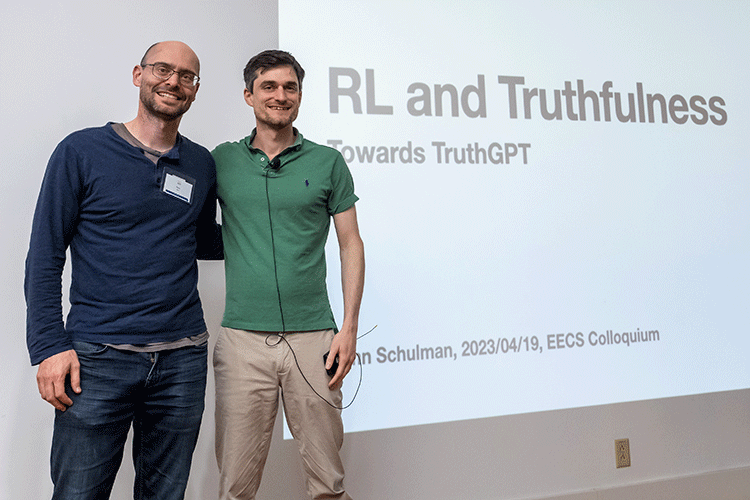

During a campus visit on Wednesday, Berkeley News spoke with Schulman about why he chose Berkeley for graduate school, the allure of towel-folding robots, and what he sees for the future of artificial general intelligence.

The interview below has been edited for length and clarity.

Q: You studied physics as an undergraduate at the California Institute of Technology and initially came to UC Berkeley to do a Ph.D. in neuroscience before switching to machine learning and robotics. Could you talk about your interests and what led you from physics to neuroscience and then to artificial intelligence?

A: Well, I am curious about understanding the universe, and physics seemed like the area to study for that, and I admired the great physicists like Einstein. But then I did a couple of summer research projects in physics and wasn’t excited about them, and I found myself more interested in other topics. Neuroscience seemed exciting, and I was also kind of interested in AI, but I didn’t really see a path that I wanted to follow [in AI] as much as in neuroscience.

When I came to Berkeley in the neuroscience program and did lab rotations, I did my last one with Pieter Abbeel. I thought Pieter’s work on helicopter control and towel-folding robots was pretty interesting, and when I did my rotation, I got really excited about that work and felt like I was spending all my time on it. So, I asked to switch to the EECS (electrical engineering and computer sciences) department.

Q: Why did you choose Berkeley for graduate school?

A: I had a good feeling about it, and I liked the professors I talked to during visit day.

I also remember I went running the day after arriving, and I went up that road toward Berkeley Lab, and there was a little herd of deer, including some baby deer. It was maybe 7:30 in the morning, and no one else was out. So, that was a great moment.

Q: What were some of your early projects in Pieter Abbeel’s lab?

A: There were two main threads in [Abbeel’s] lab — surgical robotics and personal robotics. I don’t remember whose idea it was, but I decided to work on tying knots with the PR2 [short for personal robot 2]. I believe [the project] was motivated by the surgical work — we wanted to do knot tying for suturing, and we didn’t have a surgical robot, so I think we just figured we would use the PR2 to try out some ideas. It’s a mobile robot, it’s got wheels and two arms and a head with all sorts of gizmos on it. It’s still in Pieter’s lab, but not being used anymore — it’s like an antique.

Q: As a graduate student at Berkeley, you became one of the pioneers of a type of artificial intelligence called deep reinforcement learning, which combines deep learning — training complex neural networks on large amounts of data — with reinforcement learning, in which machines learn by trial and error. Could you describe the genesis of this idea?

A: After I had done a few projects in robotics, I was starting to think that the methods weren’t robust enough — that it would be hard to do anything really sophisticated or anything in the real world because we had to do so much engineering for each specific demo we were trying to make.

Around that time, people had gotten some good results using deep learning and vision, and everyone who was in AI was starting to think about those results and what they meant. Deep learning seemed to make it possible to build these really robust models by training on lots of data. So, I started to wonder: How do we apply deep learning to robotics? And the conclusion I came to was reinforcement learning.

Q: You became one of the co-founders of OpenAI in late 2015, while you were still finishing your Ph.D. work at Berkeley. Why did you decide to join this new venture?

A: I wanted to do research in AI, and I thought that OpenAI was ambitious in its mission and was already thinking about artificial general intelligence (AGI). It seemed crazy to talk about AGI at the time, but I thought it was reasonable to start thinking about it, and I wanted to be at a place where that was acceptable to talk about.

Q: What is artificial general intelligence?

A: Well, it’s become a little bit vague. You could define it as AI that can match or exceed human abilities in basically every area. And seven years ago, it was pretty clear what that term was pointing at because the systems at the time were extremely narrow. Now, I think it’s a little less clear because we see that AI is getting really general, and something like GPT-4 is beyond human ability in a lot of ways.

In the old days, people would talk about the Turing test as the big goal the field was shooting for. And now I think we’ve sort of quietly blown past the point where AI can have a multi-step conversation at a human level. But we don’t want to build models that pretend they’re humans, so it’s not actually the most meaningful goal to shoot for anymore.

Q: From what I understand, one of the main innovations behind ChatGPT is a new technique called reinforcement learning from human feedback (RLHF). In RLHF, humans help direct how the AI behaves by rating how it responds to different inquiries. How did you get the idea to apply RLHF to ChatGPT?

A: Well, there had been papers about this for a while, but I’d say the first version that looked similar to what we’re doing now was actually a paper from OpenAI, “Deep reinforcement learning from human preferences,” whose first author is actually another Berkeley alum, Paul Christiano, who had just joined the OpenAI safety team. The OpenAI safety team had worked on this effort because the idea was to align our models with human preference — try to get [the models] to actually listen to us and try to do what we want.

That first paper was not in the language domain, it was on Atari and simulated robotics tasks. And then they followed that up with work using language models for summarization. That was around the time GPT-3 was done training, and then I decided to jump on the bandwagon because I saw the potential in that whole research direction.

Q: What was your reaction when you first started interacting with ChatGPT? Were you surprised at how well it worked?

A: I would say that I saw the models gradually change and gradually improve. One funny detail is that GPT-4 was done training before we released ChatGPT, which is based on GPT-3.5 — a weaker model. So at the time, no one at OpenAI was that excited about ChatGPT because there was this much stronger, much smarter model that had been trained. We had also been beta testing the chat model on a group of maybe 30 or 40 friends and family, and they basically liked it, but no one really raved about it.

So, I ended up being really surprised at how much it caught on with the general public. And I think it’s just because it was easier to use than models they had interacted with before of similar quality. And [ChatGPT] was also maybe slightly above threshold in terms of having lower hallucinations, meaning it made less stuff up and had a little more self-awareness. I also think there is a positive feedback effect, where people show each other how to use it effectively and get ideas by seeing how other people are using it.

Q: The success of ChatGPT has renewed fears about the future of AI. Do you have any concerns about the safety of the GPT models?

A: I would say that there are different kinds of risks that we should distinguish between. First, there’s the misuse risk — that people will use the model to get new ideas on how to do harm or will use it as part of some malicious system. And then there’s the risk of a treacherous turn, that the AI will have some goals that are misaligned with ours and wait until it’s powerful enough and try to take over.

For misuse risk, I’d say we’re definitely at the stage where there is some concern, though it’s not an existential risk. I think if we released GPT-4 without any safeguards, it could cause a lot of problems by giving people new ideas on how to do various bad things, and it could also be used for various kinds of scams or spam. We’re already seeing some of that, and it’s not even specific to GPT-4.

As for the risk of a takeover or treacherous turn, it’s definitely something we want to be very careful about, but it’s quite unlikely to happen. Right now, the models are just trained to produce a single message that gets high approval from a human reader, and the models themselves don’t have any long-term goals. So, there’s no reason for the model to have a desire to change anything about the external world. There are some arguments that this might be dangerous anyway, but I think those are a little far-fetched.

Q: Now that ChatGPT has in many ways passed the Turing test, what do you think is the next frontier in artificial general intelligence?

A: I’d say that AIs will keep getting better at harder tasks, and tasks that used to be done by humans will gradually become ones that a model can do perfectly well, possibly better. Then, there will be questions about what humans should be doing: What are the parts of the tasks where humans can have more leverage and do more work with the help of models? So, I would say it’ll just be a gradual process of automating more things and shifting what people are doing.