Andy Fell, UC Davis

William Wootton was born profoundly deaf, but thanks to cochlear implants fitted when he was about 18 months old, the now 4-year-old Granite Bay preschooler plays with a keyboard synthesizer and reacts to the sounds of airplanes and trains, while still learning American Sign Language.

“He has done extremely well,” said William’s mother, Jody Wootton. “He really appreciates music and is learning to speak.”

First approved for adults in the 1980s, cochlear implants have been used by hundreds of thousands of people worldwide. The implant bypasses most of our normal hearing process, electronically connecting a microphone directly to the cochlea, the structure in the inner ear that collects nerve signals from the ear and sends them to the brain.

But not all children respond as well as William to the implants.

“Cochlear implants are very successful for some kids, but we don’t understand why some kids do well and not others,” said Professor David Corina of the Center for Mind and Brain at the University of California, Davis.

Supported by a five-year grant from the National Institutes of Health, Corina and Lee Miller, associate professor of neurobiology, physiology and behavior at UC Davis, are working to understand why some children respond better to the implants than others.

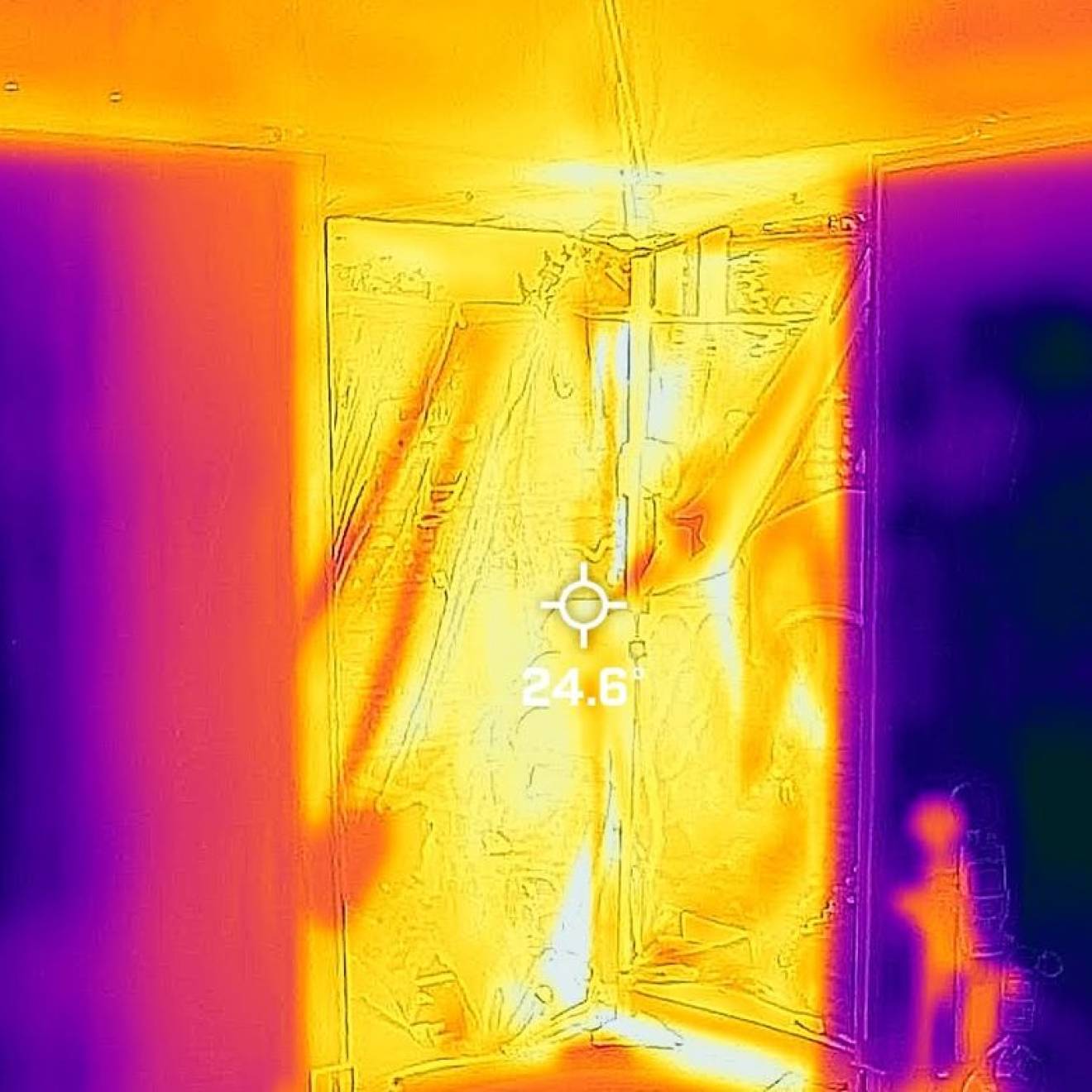

"Balance of power" between auditory and visual brain areas

Credit: UC Davis

One idea is that areas of the brain that are not being used, such as the auditory cortex in profoundly deaf children, get taken over for other functions, such as visual processing. When the child gets an implant, that part of the brain is no longer available to support hearing.

“We’re using measures of brain function to get a snapshot of the ‘cerebral balance of power’ and how it is influencing auditory and visual experiences,” Corina said. The ultimate goal is to identify clinical interventions that would help children better adapt to using cochlear implants, Miller said.

Now about a year into the study, Corina and Miller are recruiting children from 18 months to 8 years old who use cochlear implants, as well as hearing children in the same age group. They use electroencephalography, or EEG, to measure brain activity during visual and auditory processing.

During the experiment, the children watch a cartoon while a mixture of specially designed speech is played to them. The speech is designed to elicit responses from the different levels of processing in the auditory system, “from the ear to deep cortex,” Corina said.

“It takes time for speech to move through the auditory system and there are different levels at which the visual system could interfere, if it does,” he said.

The researchers plan to recruit about 60 children a year into the study, which began in 2015, and follow them for five years to track their progress.

Bilingual in sign language

Many American children grow up with more than one spoken language. Is adding a signed language any different?

“For some kids, their first language may be signed,” Corina said. “How does this affect cerebral balance?”

The important thing is that children grow up linguistically capable in whichever languages they use, he said.

William, for example, is now in a preschool program at Ophir Elementary School near Auburn, which uses American Sign Language in addition to English. So far, he’s embracing both spoken and signed languages and transitions between the two, his mother said.

“We’re absolutely pleased to have got the implants. It’s really changed our lives and changed his life,” she said.

The researchers have collaborated with the Weingarten Children’s Center, Redwood City, California; CCHAT Center, Sacramento; the Hearing Speech and Deafness Center, Seattle; the California School for the Deaf, Fremont, California; and The Learning Center for the Deaf, Boston. Financial support comes from the National Institute on Deafness and Other Communication Disorders (part of the National Institutes of Health).