Kim McDonald, UC San Diego

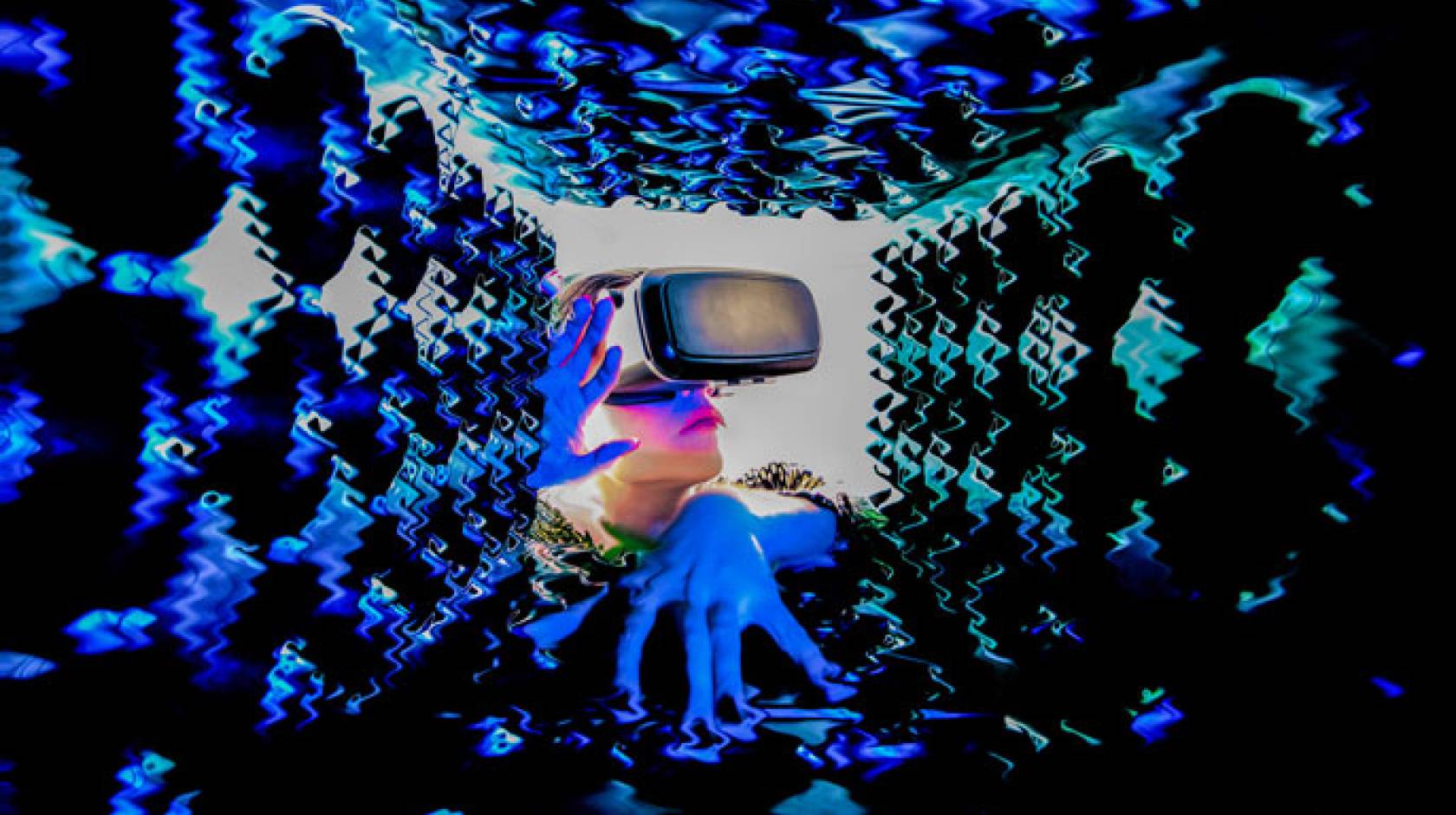

A team of researchers at UC San Diego and San Diego State University has developed a pair of “4-D goggles” that allows wearers to be physically “touched” by a movie when they see a looming object on the screen, such as an approaching spacecraft.

Credit: Ching-fu Chen

The device grew out of a study in which the neuroscientists mapped the brain areas that integrate the sight and touch of a looming object, work aimed at understanding the perceptual and neural mechanisms of multisensory integration.

But for the rest of us, the researchers said, it has a more practical purpose: The device can be synchronized with entertainment content, such as movies, music, games and virtual reality, to deliver immersive multisensory effects near the face and enhance the sense of presence.

The advance is described in a paper published online February 6 in the journal Human Brain Mapping by Ruey-Song Huang and Ching-fu Chen, neuroscientists at UC San Diego’s Institute for Neural Computation, and Martin Sereno, the former chair of neuroimaging at University College London and a former professor at UC San Diego, now at San Diego State University.

“We perceive and interact with the world around us through multiple senses in daily life,” said Huang. “Though an approaching object may generate visual, auditory, and tactile signals in an observer, these must be picked apart from the rest of the world, which William James colorfully described as a ‘blooming buzzing confusion.’ To detect and avoid impending threats, it is essential to integrate and analyze multisensory looming signals across space and time and to determine whether they originate from the same sources.”

In the researchers’ experiments, subjects judged the subjective synchrony between a looming ball (simulated in virtual reality) and an air puff delivered to the same side of the face. When the onset of the ball’s movement and the onset of the air puff were nearly simultaneous (a delay of 100 milliseconds), the air puff was perceived as completely out of sync with the looming ball. With a delay of 800 to 1,000 milliseconds, the two stimuli were perceived as one event (in sync), as if an object had passed near the face and generated a little wind.

In experiments using functional magnetic resonance imaging, or fMRI, the scientists delivered tactile-only, visual-only, tactile-visual out-of-sync, and tactile-visual in-sync stimuli to either side of the subject’s face in randomized events. More than a dozen brain areas responded more strongly to lateralized multisensory stimuli than to lateralized unisensory stimuli, the scientists reported in their paper, and the response was further enhanced when the multisensory stimuli were in perceptual sync.

The research was supported by the National Institutes of Health (R01 MH081990), a Royal Society Wolfson Research Merit Award (UK), Wellcome Trust (UK), and a UC San Diego Frontiers of Innovation Scholars Program Project Fellowship.