Sonia Fernandez, UC Santa Barbara

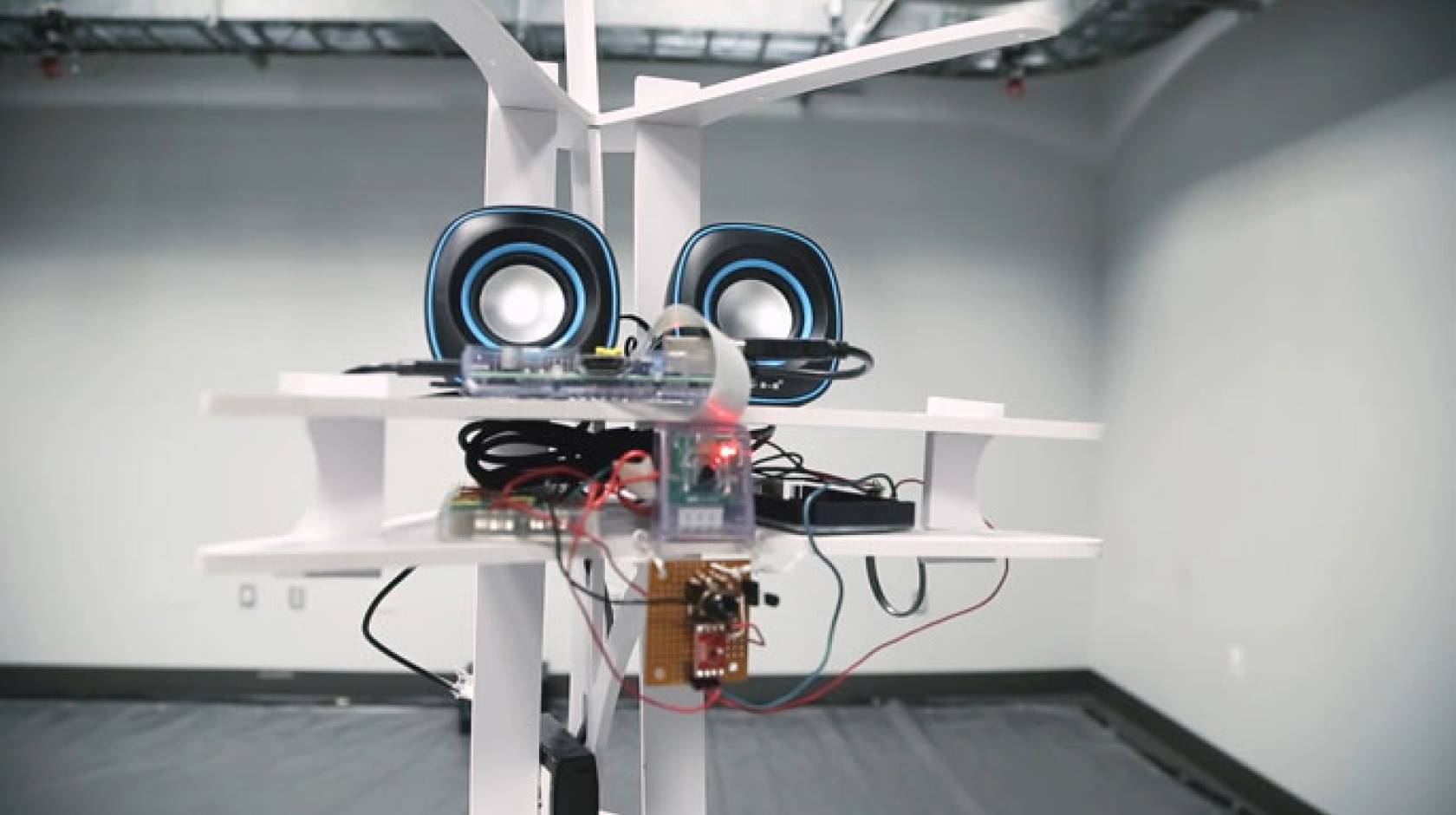

Meet ROVER. If you’re lucky — and trigger its heat sensors — it’ll roll up to you and sing a song.

This friendly robot is the creation of Hannah Wolfe, a graduate student researcher in UC Santa Barbara’s Media Arts and Technology program, who’s investigating robot-human interactions.

“I wanted to create an interactive art installation that would make people happy,” said Wolfe, who is based in the rather austere environment at UC Santa Barbara’s Elings Hall. “I thought if something came up to people and sang to them that would bring joy into the space.”

With a Roomba (sans vacuum) as its feet, a Raspberry Pi as its brain and an Arduino as its nervous system, ROVER is more than an artistic endeavor, however. It is also a tool for investigating the ways humans respond to robots.

Think about it: We ask iPhone’s Siri or Amazon’s Alexa for help accessing information, making purchases or operating Bluetooth-enabled devices in our homes. We have given robots jobs that are too tedious, too precise or too dangerous for humans. And artificial intelligence is now becoming the norm in everything from recommendations in music and video streaming services to medical diagnostics.

“Whether we like it or not, we’re going to be interacting with robots,” Wolfe said. “So, we need to think about how we will interact with them and how they will convey information to us.”

To that end, Wolfe has ROVER generate sounds (beeps, chirps and digital effects; think R2-D2) that, she found, elicit either positive or negative reactions from people. Meanwhile, an onboard camera records how individuals respond to ROVER.

“I looked through the literature on what kind of audio qualities express different emotions in linguistics and music research,” Wolfe explained. While some research focuses on how to compose these sounds, she said, there were no generative algorithms that could reliably produce sounds conveying an intended emotion: sounds that may differ from one another yet impart the same meaning.

The results of her research were somewhat surprising: people found themselves more emotionally aroused by their desktops and laptops than by a robot that serenaded them.

“That was definitely against my expectations,” Wolfe said, noting that the result could have a variety of causes, not least how close people are to their computers.

“A computer is in our personal space — something that we touch — and having it in your personal space is potentially more threatening or exciting than something in your social space,” said Wolfe. ROVER, by contrast, is programmed to keep a polite distance from the person to whom it is singing, and that lack of intimacy may result in relatively less arousal.

Wolfe’s research is one of many ongoing forays into the nature of human-robot interactions, a research field that is growing in prominence as automatons become increasingly sophisticated and share more space with people. Wolfe points to Jibo, a commercially available “social robot” created by Massachusetts Institute of Technology media arts professor (and UC Santa Barbara alumna) Cynthia Breazeal.

“Jibo is pretty much Siri with a face that looks at you and talks,” Wolfe said. “It doesn’t move around the space, but it is the beginning of social robots in the home.”

Beware the ‘uncanny valley’

One of the longest-standing areas of research in robotics, and also one of its pitfalls, is a strange phenomenon that occurs as androids come to resemble their human creators more closely.

“If something becomes too real but not quite real enough it falls into the uncanny valley,” said Wolfe. Originally a Japanese term coined in 1970 and loosely translated into English, “uncanny valley” refers to feelings of strangeness, coldness, eeriness and even disgust that arise in people when a robot begins to blur the line between machine and living, breathing human. Star Wars’ C-3PO, for instance, has an obviously robotic build but a very human affect, which makes him harmless and endearing. Hanson Robotics’ AI-powered Sophia, on the other hand, is far more lifelike but not quite human, making her fascinating to some and creepy to others.

“There’s been a lot of work trying to figure out what exactly it is, where things fall into it and how you can kind of get around it using design,” Wolfe said. “I’ve started looking at playing with emotive gibberish, and the sounds that ROVER makes are kind of creepy and gross, which is interesting for art, but inappropriate for social robotics.” For that reason, she focuses more on beeps, notes and digital effects than on speech.

However, this won’t stop Wolfe from exploring humans’ mental discomfort with almost-humans, albeit in a playful manner. She showed ROVER speaking gibberish at the 2017 International Symposium on Electronic Art and is planning another exhibition.

“I’ll be working with Sahar Sajadieh, a performance artist in Media Arts and Technology. We were talking about having ROVER approach people and either hit on them or insult them,” she said. “We haven’t decided which.”

***

Hannah Wolfe, Marko Peljhan and Yon Visell. “Singing Robots: How Embodiment Affects Emotional Responses to Non-Linguistic Utterances.” IEEE Transactions on Affective Computing, in press, 2017.