Laura Smith, California Magazine

In September of last year, a startling headline appeared on the Guardian’s website: “A robot wrote this entire article. Are you scared yet, human?” The accompanying piece was written by GPT-3, or Generative Pre-trained Transformer 3, a language-generating program from San Francisco–based OpenAI, an artificial intelligence research company whose founders include Tesla billionaire Elon Musk and UC Berkeley Ph.D. John Schulman. “The mission for this op-ed is perfectly clear,” the robotic author explained to readers. “I am to convince as many human beings as possible not to be afraid of me. Stephen Hawking has warned that AI could ‘spell the end of the human race.’ I am here to convince you not to worry. Artificial intelligence will not destroy humans. Believe me.

“I taught myself everything I know just by reading the internet, and now I can write this column,” it continued. “My brain is boiling with ideas!” It ended loftily, quoting Gandhi: “‘A small body of determined spirits fired by an unquenchable faith in their mission can alter the course of history.’ So can I.”

Public reaction was fairly mixed. Some expressed excitement at this advancement in technology. Others were wary. “Bet the Guardian’s myriad lifestyle columnists are pretty nervous right now,” one commenter wrote. I didn’t know about the Guardian’s columnists, but I sure was nervous. How could a machine have written this well, and how long before my obsolescence? Scared yet? Absolutely.

But many of those who had a greater understanding of natural language processing systems argued that my fear was unfounded. To say that GPT-3 wrote the op-ed was misleading, they insisted: An article like this required an enormous amount of human labor, both to build the program itself and to stitch together the outputs. “Attributing [the Guardian article] to AI is sort of like attributing the pyramids to the Pharaoh,” programmer-poet Allison Parrish ’03 told my cohost Leah Worthington and me in a recent episode of California magazine’s podcast, The Edge. “Pharaoh didn’t do that. The workers did.”

And indeed, a few days after the original op-ed, the Guardian acknowledged as much in a follow-up letter called “A human wrote this article. You shouldn’t be scared of GPT-3.” The author, Albert Fox Cahn, argued that while GPT-3 is “quite impressive … it is useless without human inputs and edits.”

Liam Porr wouldn’t argue with that. Porr was the UC Berkeley undergraduate in computer science who fed GPT-3 the prompts necessary to generate the Guardian piece. As he explained on our podcast, “All the content in the op-ed was taken from output of GPT-3, but not verbatim. It generated several outputs. And then the Guardian editors took the best outputs and spliced them together into this one large op-ed.” Still, according to Porr, the Guardian editors reported that the process was easier than, or at least comparable to, working with a human writer.

Regardless of who put in the elbow grease, AI has brought about a new frontier of language generation, as many of us are aware. As I write this article, phantom text appears ahead of my cursor like a spouse completing my sentences. Often it is right. Which, like the spouse, is annoying. This is now standard, everyday word processing. But in recent years, some have been taking it further, actively using language-generating AI to craft literature. In 2016, a team of UC Berkeley graduate students created a sonnet-generating algorithm called Pythonic Poet and won second place in Dartmouth’s PoetiX competition, in which poems are judged by how “human” they seem. At the time, poet and Cal grad Matthew Zapruder rejected the idea that a machine could write great poetry. He told California, “You can teach a computer to be a bad poet, doing things you expect to be done. But a good poet breaks the rules and makes comparisons you didn’t know could be made.”

But Parrish, an assistant arts professor at NYU who uses AI to craft verse, argues that computer-generated poetry is a new frontier in literature, allowing for serendipitous connections beyond anything human brains can create.

It was an intriguing idea, but what happens to art, I wondered, when our muses become mechanical, when inspiration is not divine, but digital?

Parrish has been programming roughly since she was in kindergarten. In the third grade, her father gave her “The Hobbit,” by J.R.R. Tolkien. Tolkien was a philologist who invented a series of Elvish languages, which were constructed with aesthetic pleasure in mind, rather than just function. Parrish’s love of language blossomed under Tolkien’s influence. “I think sort of inevitably, as my interest in computer programming deepened, and as my interest in language deepened, they kind of came together to form this Voltron of being interested in computer-generated poetry,” she said. (Voltron, incidentally, is an animated robotic superhero, “loved by good, feared by evil,” that gains strength by combining with other robots.)

Now she creates poetry using a database of public domain texts called Project Gutenberg and a machine learning model that pairs lines of poetry with similar phonetics. In using computational technology to create prose, the idea, Parrish said, “is to create an unexpected juxtaposition. We are limited when we’re thinking about writing in a purely intentional way. We’re limited in the kinds of ideas that we produce. So instead, we roll the dice. We create a system of rules. We follow that system of rules in order to create these unexpected juxtapositions of words, phrases, lines of poetry that do something that we would be incapable of doing on our own…. There’s untapped potential for things that might bring us joy in what we can do with our linguistic capacity.”

The way AI writes, by finding patterns and connections between texts, is not that different from how we do it, but computers are quicker and can draw from a vast universe of digitized information.

“A single human can’t read the whole web, but a computer can,” said John DeNero, a former Ph.D. student and now an assistant teaching professor at the UC Berkeley Artificial Intelligence Research Lab. We mere mortals must rely on the comparably small set of data points that we read or experience over the course of our brief lives. “Effectively, [GPT-3] is set up to memorize all the text on the web,” DeNero said. In this way, GPT-3 could be considered deeply human. It is drawing from a data set of countless human voices.

Algorithms (really just a set of rules) have a long history in creative writing. Parrish cites as an example the “I Ching,” an ancient Chinese divination text that describes flipping coins and interpreting the meaning of those coins. Or take Tristan Tzara’s instructions for making a Dada poem, in which he advised cutting an article into individual words, throwing the words into a bag, and drawing them out at random. Even in more formal, less strictly experimental writing, writers have often relied on somewhat random rules as a means to create, from sonnets’ use of iambic pentameter to haikus with their 5-7-5 syllable structure.
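
Tzara’s recipe is simple enough to sketch in a few lines of Python. What follows is only an illustration, not anything Tzara or Parrish wrote: the function name `dada_poem`, the `seed` parameter, and the sample clipping are assumptions made for the example.

```python
import random


def dada_poem(article, seed=None):
    """Cut an article into words, shake them in a bag, and draw them out."""
    words = article.split()      # the scissors: one slip of paper per word
    rng = random.Random(seed)    # the bag; a seed makes the draw repeatable
    rng.shuffle(words)           # shake gently
    return " ".join(words)       # copy the words out in the order drawn


# Hypothetical clipping, borrowed from the headline quoted earlier.
clipping = "A robot wrote this entire article"
poem = dada_poem(clipping, seed=0)
print(poem)
```

Every word of the clipping survives; only the order is left to chance. That is the whole algorithm: a fixed rule, and a roll of the dice.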

As any writer who has found herself frozen before a blank page knows, the creative process contains a contradiction: While total freedom can be paralyzing, structure can be freeing and rigid rules can result in groundbreaking work. I left our podcast conversation with Parrish feeling stodgy and unimaginative, but also eager to try my hand at something more experimental.

Writing, arguably, hasn’t experienced any major evolutionary steps since word processing sped up the transfer of thoughts from brain to page, or since the internet widened our access to information. What if, armed with beautiful machines, writers could push their artform beyond its current boundaries, transcend the idea of authorship, even unravel the mysteries of the creative process? That could be revolutionary. And yet, we’ve long accepted the idea that stories come from a “force” outside of us. By John Milton’s own account, he wasn’t the author of “Paradise Lost.” He claimed it was dictated to him by his “celestial patroness” while he slept. He would emerge from his slumbers with the fully formed epic poem ready to be announced to the closest person with a pen. When he tried to write while awake, without his muse, nothing came. The feeling of words and ideas flowing through you is one of the most gratifying experiences a writer can have. Who’s to say a muse couldn’t be mechanical?

Porr and Parrish both suggested programs to tap the mechanical muse. While GPT-3 is not available for use by the general public, according to Porr, I could interact with it through a story-generating game called AI Dungeon, run by Latitude, a company purporting to make “AI a tool of creativity and freedom for everyone.” Using the program, I could enter a line of text and it would produce a follow-up line that would presumably advance the plot. It’s like the writing game “exquisite corpse,” where a group of writers passes around a folded piece of paper on which only the previous line is visible as they construct the next line, but in this case, my co-conspirator was a computer program, trawling the internet for patterns in human-generated text, alchemizing gigabytes of language from the web into narrative.

Upon entering into the “game,” the program asks for a sentence describing the character and an action to set them in motion. I tried out a few different options. “You are a young woman crying on a park bench,” I wrote. “At first you don’t notice the old man approach the bench and sit down.” The program responded:

“I’m sorry about your sister,” he says, “she was very sick.”

I was impressed. The program had made the connection that a dead sister was something to cry over. I entered another line: “You ask him how he knew your sister.” It responded:

The old man looks at you with pity in his eyes. He doesn’t say anything else but stares at you as if he is trying to read your mind.

Hmm. I would come to see this as typical of the program. It often seemed to dodge adding new information to the story line. My prompts of “Who are you?” would be met with a circular logic like “but who are you?” or “I think you know who I am.” Not only did it fail to advance the plot in a meaningful direction, but the language itself was pedestrian.

Parrish had a similar complaint about GPT-3. “Really, the only purpose of a model like that is to produce language that resembles, as closely as possible, conventionally composed language. … As a poet, I’m trying to create unconventional language. So, in fact, this tool is useless.” Her own language-generating program, which took countless hours to write, isn’t intended to create meaning in the poems it generates. It’s not about story, but about producing new combinations of words and sounds, and the “pleasure of what happens in your mouth … which is actually a tremendous pleasure. It’s fun to talk, and that’s part of the reason it’s fun to read poetry.”

Parrish’s poetry is, above all, delightful to the ears. She read some of it on the podcast:

Sweet hour of prayer, sweet hour of prayer it was the hour of prayers. In the hour of parting, hour of parting, hour of meeting hour of parting this. With power avenging, … His towering wings; his power enhancing, in his power. His power.

“It’s not about anything,” she insisted. “There’s no aboutness involved in the composition of this poem, really, in any way.” That kind of abstruseness may work for poetry, but narrative requires aboutness. My attempts to use the program to inject serendipity into the story had me running in circles. Instead of coming up with something startling, I found myself in a linguistic hall of mirrors.

As DeNero explained, GPT-3 wasn’t really imagined for my purpose. “The big useful breakthroughs are not in storytelling,” he said. Natural language processing systems “just parrot back what they were trained on. … It’s full of all kinds of clever stuff, but there isn’t a whole lot of originality that it’s generating.”

Sometimes the responses made no sense at all. In one instance, a character inexplicably walked into the room with “backwards camouflage pants.” In another, a character in a full body cast “turns around” to see someone behind him. The basic facts of what it’s like to have a body and move through the world were understandably lost on the incorporeal AI. Later, the program suggested that the body-casted character “try to sit up.”

Occasionally, the program did come up with something original. Once, when I introduced a character who was a professor at Berkeley who heard a knock at his door, the program introduced another character named “Professor Jonathan Westfield,” also from UC Berkeley. (I checked: There is no Jonathan Westfield at the University, but there is a Jonathan Phillips at Westfield State University.)

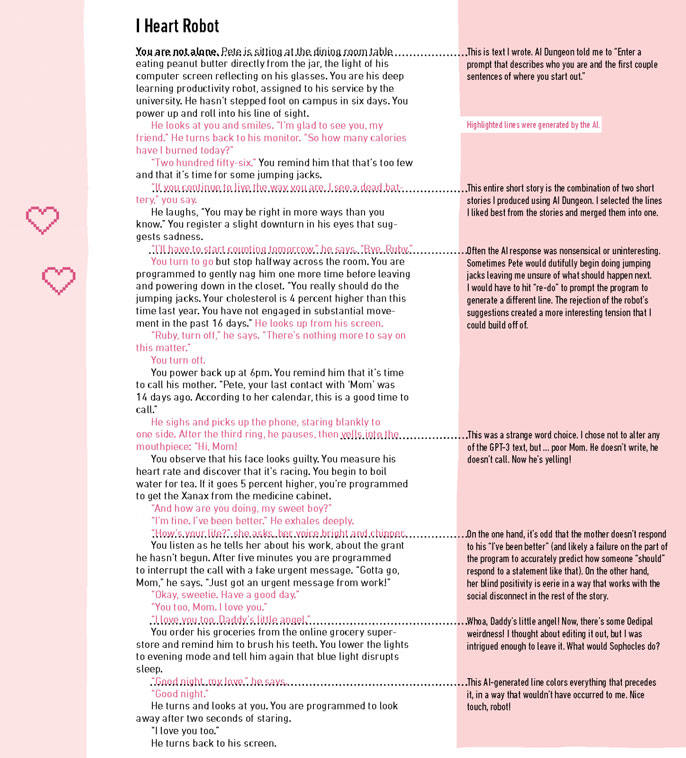

Then, in the story below, there was a turn that felt both fresh and moving: The program produced a line where the human in the story, Pete, said, “Goodnight, my love,” to his robot. The robot, which has been programmed to boost Pete’s productivity, has been ministering to him with the patient devotion of a spouse all day. But she is not a spouse. Pete is alone. When he calls her “my love,” the scene is transformed into a touching, even tragic, portrait of modern loneliness. Of course, I was reading into it; the program had intended nothing, knew nothing of human graspings for love. It just knew that often, when humans say goodnight, they use terms of endearment as well. Still, it was a nice touch.

The text you see below was highly edited. When the program produced a prompt I didn’t like or thought was nonsense, I hit the redo button and made it try again. Often I did this several times in a row, eliciting this response: “The AI doesn’t know what to say. Alter, undo, or try again.” I moved lines around, deleted whole sections. In short, it wasn’t the beautiful serendipity machine I was hoping it would be.

Before writing the Guardian piece, Porr posted a self-help article on his blog that he had composed with the help of GPT-3. Most people hadn’t realized it was written by a robot because it looked like every other self-help post on the internet:

In order to get something done, maybe we need to think less. Seems counter-intuitive, but I believe sometimes our thoughts can get in the way of the creative process. We can work better at times when we “tune out” the external world and focus on what’s in front of us.

Porr explained that language-generation programs are already being used for formulaic writing: sports reporting, for example, the kind of writing that reports numbers but doesn’t require a lot of analysis. That may not seem like a real threat to the foundations of journalism, but Porr thinks it could eventually chip away at already understaffed newsrooms. “If you have a content website like BuzzFeed,” Porr said, “and you have 700 writers, and you can make half of those writers 50 percent more efficient, then you can save upwards of $3 million a year.” Of course, “efficiency” and “saving money” probably mean firing people.

“Soon we’re going to see a lot of the creative spaces become automated,” he said. Still, he is confident that these programs won’t replace human writers but will instead “raise the bar for content.” The mediocre but necessary content will be written by AI, and this will “up people’s threshold for the value of the content that they read online. So that means that the barrier to entry is a lot higher as a writer,” and that “people are going to expect higher-value writing from a person.” Maybe so. Either that or we’ll soon be so flooded with mediocre content that we’ll forget what beautiful is.

In discussions about this piece among the California editorial team, we kept coming back to the same question: What is missing from computer-generated art? We decided it was something along the lines of “soul,” or “humanity.”

In Cal dropout Philip K. Dick’s “Do Androids Dream of Electric Sheep?,” androids are virtually indistinguishable from humans, save for one quality: empathy. “An android, no matter how gifted as to pure intellectual capacity, could make no sense out of the fusion which took place routinely among the followers of Mercerism” (a fictional religion devoted to empathy).

Dick’s assertion that empathy is humanity’s distinguishing trait recalls the British novelist Henry Green, who said, “Prose should be a direct intimacy between strangers.” Perhaps this is what is so dissatisfying about AI-generated art — the fact that there is no one to grow intimate with, no empathetic flow between two living, breathing beings who will likely never meet and may not even be alive at the same time, but at least share this.

“There’s lots we don’t understand about natural language generation still, like exactly how the human mind works and how it turns thought into language,” said DeNero. Machines may have access to nearly infinite knowledge, yet our meaning-making capabilities are far superior. But if, for now, AI-generated art strikes us as uninspired, it’s also true that, through machine learning, the technology is constantly improving itself, leaving open the possibility that one day, it could learn to empathize, to synthesize this thing we call soul. Until then, we are the beautiful machines.

Laura Smith is the deputy editor of California magazine and the author of the book “The Art of Vanishing.”

What follows is a literary experiment. Wondering if artificial intelligence could be used as a tool to supplement human creativity, I wrote a short story “in collaboration” with a site called AI Dungeon, which uses the machine learning program GPT-3 to generate text. I would enter a line of text and the AI would produce the next line. Below is an annotated version of the story that explains the editing process.

Credit: Art by Michiko Toki