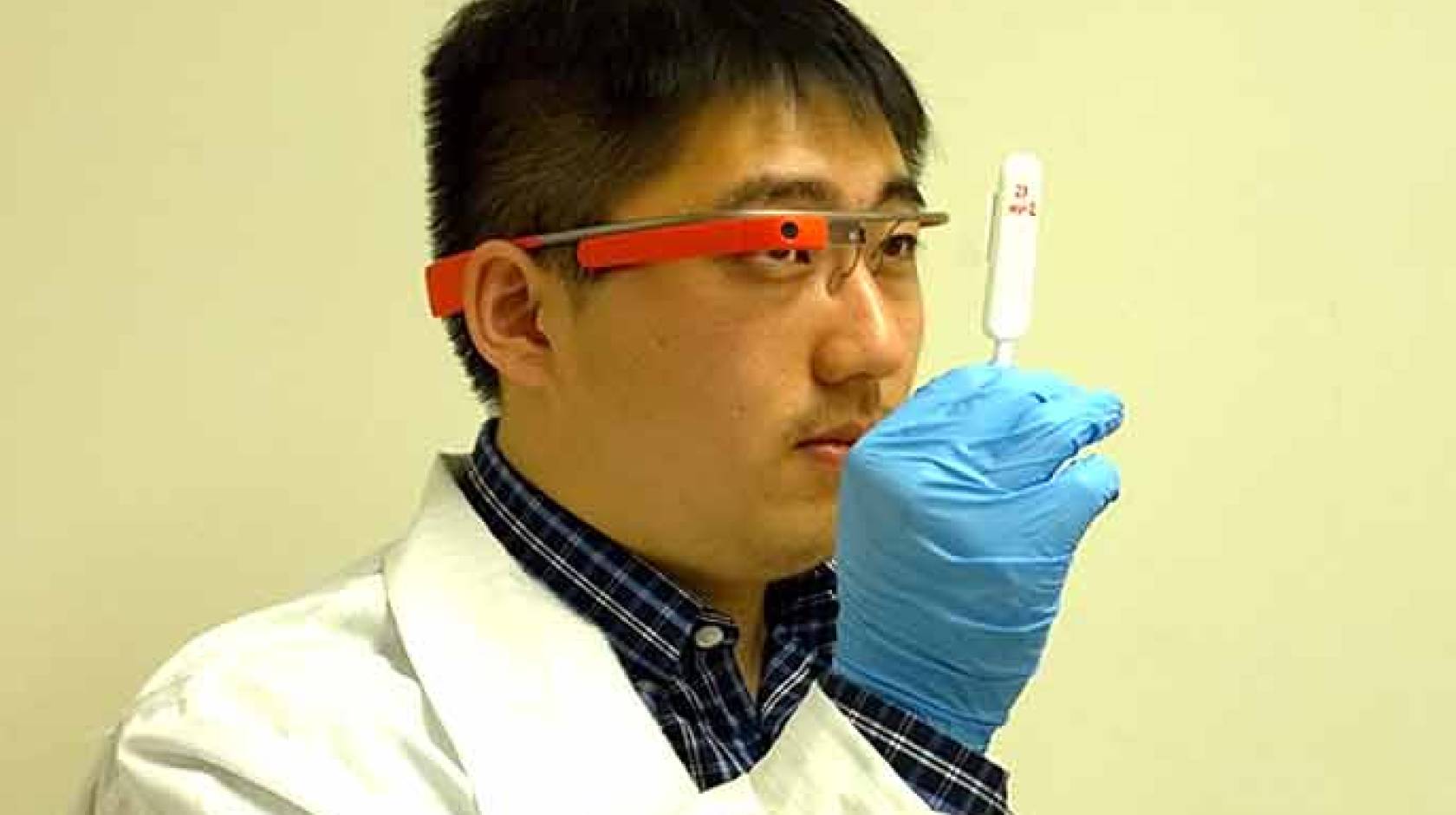

Bill Kisliuk, UCLA

A team of researchers from UCLA's Henry Samueli School of Engineering and Applied Science has developed a Google Glass application and a server platform that allow users of the wearable, glasses-like computer to perform instant, wireless diagnostic testing for a variety of diseases and health conditions.

With the new UCLA technology, Google Glass wearers can use the device's hands-free camera to capture pictures of rapid diagnostic tests (RDTs), small strips on which blood or fluid samples are placed and which change color to indicate the presence of HIV, malaria, prostate cancer or other conditions. Without relying on any additional devices, users can upload these images to a UCLA-designed server platform and receive accurate analyses, far more detailed than what the human eye can provide, in as little as eight seconds.

The new technology could enhance the tracking of dangerous diseases and improve public health monitoring and rapid responses in disaster-relief areas or quarantine zones where conventional medical tools are not available or feasible, the researchers said.

"This breakthrough technology takes advantage of gains in both immunochromatographic rapid diagnostic tests and wearable computers," said principal investigator Aydogan Ozcan, the Chancellor's Professor of Electrical Engineering and Bioengineering at UCLA and associate director of UCLA's California NanoSystems Institute. "This smart app allows for real-time tracking of health conditions and could be quite valuable in epidemiology, mobile health and telemedicine."

The research is published online in the peer-reviewed journal ACS Nano.

In addition to designing the custom RDT-reader app for Google Glass, Ozcan's team implemented server processes for fast, high-throughput evaluation of test results arriving from multiple devices simultaneously. Finally, the researchers developed a web portal where users can view test results, maps charting the geographical spread of various diseases and conditions, and the cumulative data from all the tests they have submitted over time.

To submit images for test results, Google Glass users only need to take photos of RDT strips or other commonly available in-home tests, then upload the images wirelessly through the device to the UCLA-designed web portal. The technology permits quantified reading of the results to a few-parts-per-billion level of sensitivity, far greater than that of the naked eye, thus eliminating the potential for human error in interpreting results. Such error is a particular concern when the user is a health care worker who routinely handles many different types of tests.
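The article does not describe how the server turns a photo into a quantified result. Purely as an illustration, and not the UCLA team's published method, the Python sketch below assumes the strip image has already been cropped and reduced to a one-dimensional darkness profile along the strip (higher values mean darker pixels), and that the positions of the control and test lines are known. The function names, the window positions and the `min_control` threshold are all hypothetical. It subtracts the local background, integrates each line's signal and reports the test line relative to the control line, treating a missing control line as an invalid test.

```python
from statistics import median
from typing import Optional, Sequence, Tuple

Window = Tuple[int, int]  # hypothetical [start, end) pixel range of one line


def line_signal(profile: Sequence[float], window: Window, background: float) -> float:
    """Integrate the darkness above background inside one line's window."""
    start, end = window
    return sum(max(v - background, 0.0) for v in profile[start:end])


def read_strip(
    profile: Sequence[float],
    control_window: Window,
    test_window: Window,
    min_control: float = 1.0,  # hypothetical validity threshold
) -> Optional[float]:
    """Return test/control signal ratio, or None if the control line is missing."""
    inside = set(range(*control_window)) | set(range(*test_window))
    # Background level: median darkness of pixels outside both line windows.
    background = median(v for i, v in enumerate(profile) if i not in inside)
    control = line_signal(profile, control_window, background)
    test = line_signal(profile, test_window, background)
    if control < min_control:
        return None  # no visible control line: the test run is invalid
    return test / control


if __name__ == "__main__":
    # Synthetic strip: background 10, control line at 4..6, fainter test line at 13..15.
    strip = [10.0] * 20
    for i in range(4, 7):
        strip[i] = 110.0
    for i in range(13, 16):
        strip[i] = 60.0
    print(read_strip(strip, (4, 7), (13, 16)))  # 0.5
```

Normalizing by the control line is one common way to make a reading less sensitive to lighting and exposure differences between photos; a real reader would also need to locate the strip and its lines in the image, which this sketch assumes has already been done.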

To gauge the accuracy and efficiency of the technology, the UCLA team used an in-home HIV test designed by OraSure Technologies and a prostate-specific antigen test made by JAJ International. The researchers photographed the tests indoors under normal fluorescent room lighting. They submitted more than 400 images of the two tests, and the RDT reader and server platform were able to read the images 99.6 percent of the time. In every case in which the technology successfully read an image, it returned an accurate, quantified test result, according to the team.

The researchers also tested more than 300 images that were blurry or were taken under various natural-usage scenarios, and achieved a read rate of 96.6 percent.

The first author of the paper is Steve Feng, a researcher in UCLA's electrical engineering department. Other contributors include researchers Romain Caire, Bingen Cortazar, Mehmet Turan and Andrew Wong, all with UCLA's electrical engineering department.

Financial support for the Ozcan Research Group is provided by the Presidential Early Career Award for Scientists and Engineers, the Army Research Office Life Sciences Division, an ARO Young Investigator Award, the U.S. Army Tank Automotive Research, Development and Engineering Center, the National Science Foundation CAREER Award, the NSF CBET Division Biophotonics Program, an NSF Emerging Frontiers in Research and Innovation (EFRI) Award, the Office of Naval Research and a National Institutes of Health Director's New Innovator Award.